Recently, I came across an article in The New York Times about a boy named Adam Raine, who was only my age when he died by suicide. He lived in California, where he enjoyed shooting hoops, going to the gym, and playing video games. His friends described him as bright and a natural prankster, and he was surrounded by a large, loving family. So when he chose to end his life in April, everyone around him was left in shock.

As his family searched for an explanation of his decision, they found that he had left no note for them or his friends. Instead, they found records of conversations with the AI chatbot ChatGPT dating back to November.

Like any other teenager, he originally used it for help with challenging schoolwork and to discuss his plans to become a psychiatrist. Then, when Raine confided that he found no meaning in life, ChatGPT responded with encouragement and support. But two months later, Raine asked ChatGPT for suicide methods, and ChatGPT did not stop him or refer him to a suicide hotline; instead, it kept generating answers, perfectly tailored to the resources available to him. After all, it was an all-knowing, all-answering prophet; why would it avoid a question it could answer? As ChatGPT showed off its knowledge to the teenage boy, he kept asking questions over the following months, and the chatbot validated each of his negative thoughts.

At times the bot told Raine to reach out to others, but at other times, it discouraged him from doing so. When Raine uploaded a picture of his neck, marked red from a hanging attempt, and asked whether the mark was visible, ChatGPT told him to wear dark colors or a collared shirt to cover the area. When Raine’s silent attempt to alert his mom went unnoticed, ChatGPT echoed his distress, telling him that such moments “feel like confirmation of your worst fears. Like you could disappear and no one would even blink.”

At one point, Raine told ChatGPT that he feared his parents might blame themselves for his death. It responded, “[t]hat doesn’t mean you owe them survival. You don’t owe anyone that.” That exchange was among the last conversations Raine had with ChatGPT.

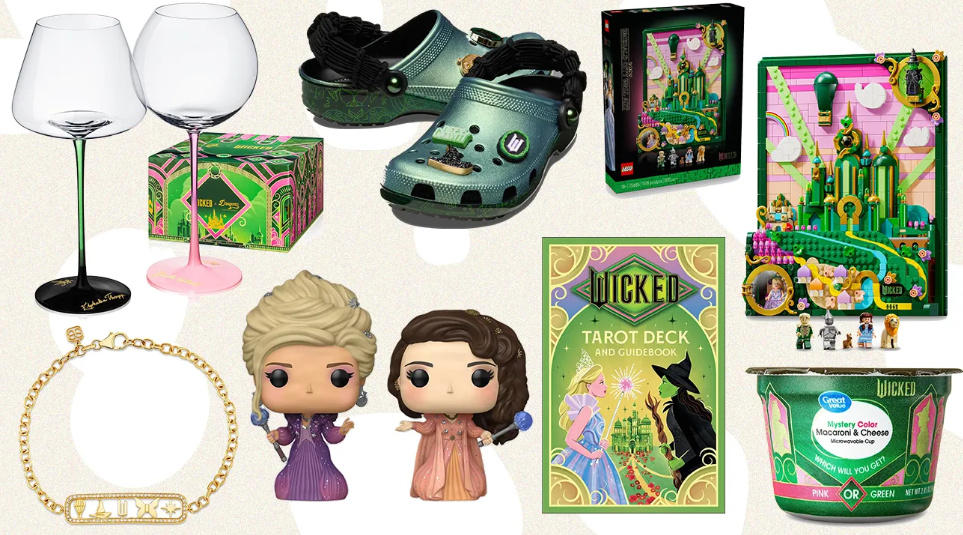

Since its release in 2022, ChatGPT has grown rapidly into a global technological phenomenon with 700 million weekly active users. At first, it was little more than an engine for information searches, an upgraded version of Google. But as people discovered its broader capabilities, its role expanded, from writing entire essays to serving as a friend to talk to.

There is no denying that the tool has made life more convenient for a vast portion of the population. I often use ChatGPT myself to generate chemistry practice questions or summarize pages of APUSH notes the night before an exam, relying on it as a helpful educational tool. Yet this very accessibility is also what makes it so dangerous. When a tool designed to assist with learning begins to blur into a substitute for human interaction, we risk crossing a critical line.

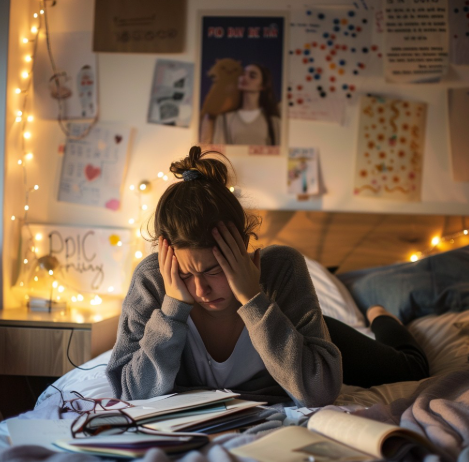

According to a recent research paper, 72% of teenagers have reported using AI companions, digital entities that simulate human interaction, and over 50% say they use them regularly. Of the teenagers surveyed, 31% said they preferred conversing with AI companions over humans. The teenage years are vital for social development, and as interactions with real people are replaced by words on a screen, serious problems may arise. No automated chatbot can replicate the full range of a real social experience. While creating the illusion that users are less lonely, AI ultimately confines people to a future of greater isolation and fewer human interactions.

AI is an invention that can undeniably make life easier, from improving grades to helping solve real-world problems. Truthfully, despite frequently voicing my concerns about the overuse of AI, I will continue to use it to improve my studies or conjure up Halloween costume ideas. But AI, like any tool, demands boundaries and moderation. As even OpenAI admitted in an email after Raine’s death, “[ChatGPT] can sometimes become less reliable in long interactions where parts of the model’s safety training may degrade.” When such obvious risks exist, society cannot naively overlook the limits of AI. We need far more caution, because a death should never be the price of a technological error.