Combating Deep Fakes and the Potential Threat of AI

October 21, 2020

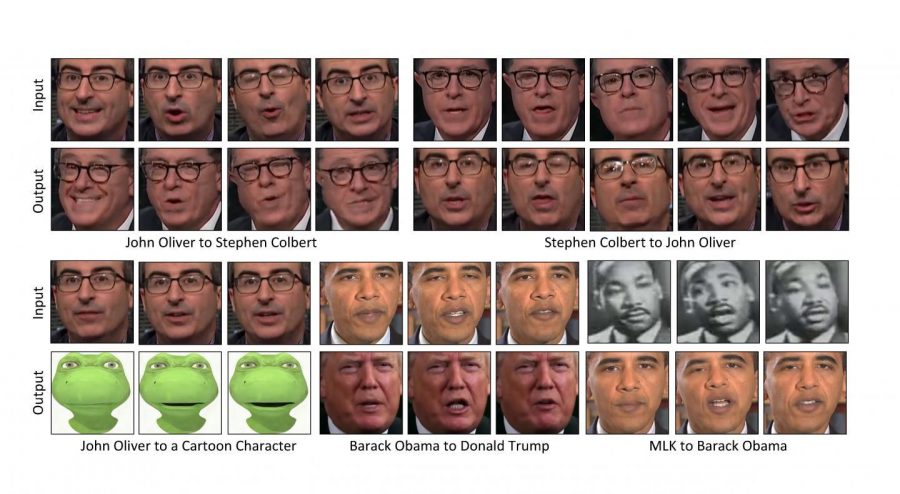

Artificial intelligence (AI) is a rapidly growing field. From agriculture to sports analytics, AI has proven useful for a wide variety of applications. Although many benefits come with developing and applying AI in our everyday lives, there are also many potential threats if AI is used with malicious intent. One prominent example is deep fakes, a type of lip-sync technology that manipulates what a person appears to say. By using deep fakes, one can literally put words in people’s mouths.

Terminator, 2001: A Space Odyssey, and RoboCop are just some of the popular movies associated with evil AI taking over the world. Hollywood has constantly portrayed AI as a dangerous threat to humanity. Despite this, AI has proven to be extremely helpful and versatile. For example, YouTube uses AI to recommend videos based on your viewing history. Another example can be seen in medicine, where AI has been used to accelerate drug discovery. The applications are endless. The problem with AI is not the stereotypical “gain control of humans and take over the world.” Instead, the problem is how people use it. As with many other tools, it is essential to make sure AI is used to help people and not to harm them.

One of the most significant applications for AI is video editing and image generation. Researchers have studied these techniques for several years, but they became widely known to the public through the website thispersondoesnotexist.com. Whenever a user refreshes the page, the website displays an image of a person created by a type of algorithm called a generative adversarial network (GAN). As the website’s name suggests, the person in the image is entirely fake, even though he or she looks eerily realistic.

Deep fakes are similar to image generation but focus on editing an actual video. One prominent example is an edited video of an Obama speech, for which Jordan Peele, who is known for his comedic impersonations, provided the voice. Deep fakes essentially allow people to manipulate the way a person talks, from facial expressions to mouth movements. They were initially created to help edit scripted dialogue in movies and commercials but are now a source of concern for many people.

To combat the threat of deep fakes, a team of AI experts at Stanford University and UC Berkeley created a detection tool that helps distinguish deep fake videos from authentic ones. The researchers focused on finding mismatches between spoken sounds and the mouth shapes that should accompany them. For example, pronouncing the letter “B” requires the lips to close briefly. Deep fakes tend to show discrepancies between mouth movements and the sounds being spoken, and the algorithm exploits this weakness. The team’s algorithm classified deep fakes of Obama with an accuracy of 90 percent.
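To make the idea concrete, here is a minimal Python sketch of a mouth-versus-sound consistency check. It is not the researchers' tool, whose details are not described here. It assumes the audio has already been aligned into timed phonemes and that each video frame comes with a `mouth_open` score (0.0 meaning fully closed). The names `Frame`, `Phoneme`, `count_mismatches`, and both thresholds are hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass

# Sounds that require the lips to close (illustrative assumption).
CLOSED_LIP_PHONEMES = {"B", "M", "P"}


@dataclass
class Frame:
    time: float        # timestamp in seconds
    mouth_open: float  # hypothetical lip-gap score, 0.0 = fully closed


@dataclass
class Phoneme:
    symbol: str
    time: float        # timestamp in seconds


def count_mismatches(phonemes, frames, closed_threshold=0.1, window=0.05):
    """Return (mismatches, checked) over the closed-lip phonemes.

    A mismatch is a closed-lip phoneme for which no frame within
    `window` seconds shows a mouth at or below `closed_threshold`.
    """
    mismatches = 0
    checked = 0
    for p in phonemes:
        if p.symbol not in CLOSED_LIP_PHONEMES:
            continue
        nearby = [f for f in frames if abs(f.time - p.time) <= window]
        if not nearby:
            continue  # no video evidence for this sound, so skip it
        checked += 1
        if min(f.mouth_open for f in nearby) > closed_threshold:
            mismatches += 1
    return mismatches, checked


# Toy data: the mouth closes for "B" but stays open during "M".
phonemes = [Phoneme("B", 1.0), Phoneme("A", 1.2), Phoneme("M", 2.0)]
frames = [Frame(1.0, 0.02), Frame(1.2, 0.6), Frame(2.0, 0.5)]
print(count_mismatches(phonemes, frames))
```

A high share of mismatches among the checked sounds would be a reason to look at a video more closely. A real detector would also need a reliable way to measure mouth shape and to align audio with video, which this sketch takes as given.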

Despite the team’s impressive efforts, the researchers emphasize that this is only a temporary solution. As deep fakes become more advanced, combating them will become significantly more difficult. Because of this, it is important to stay vigilant about misinformation, deep faked or not.