You Snooze You Lose: Tesla Drivers Fall Asleep at the Wheel

September 24, 2019

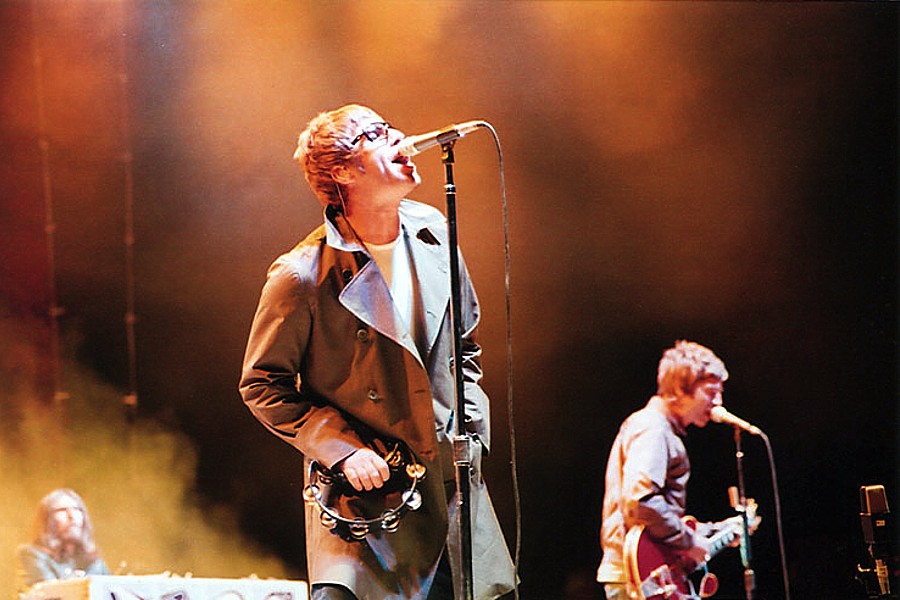

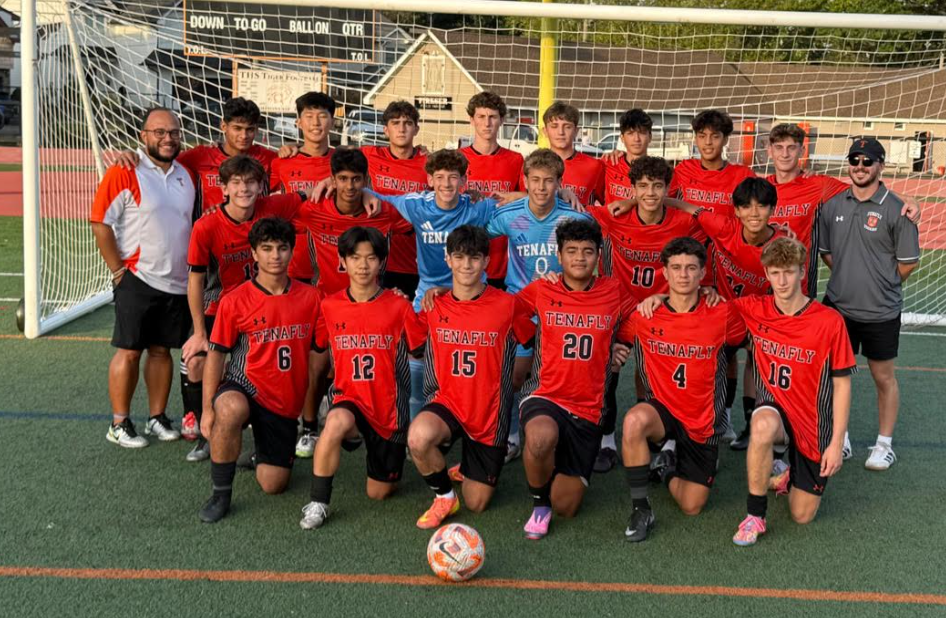

Earlier this month, a Tesla driver was spotted asleep at the wheel one afternoon on the Massachusetts Turnpike. As if that weren’t bad enough, the driver’s passenger appeared to be passed out as well. The scene was captured in a shocking video posted to Twitter by user Dakota Randall on September 8th, 2019, at 3:13 PM. According to the New York Post, Randall guessed that he and the Tesla driver were both going about 60 mph through Newton at the time. Unfortunately, this isn’t the first incident of its kind: there have been numerous reports across the US of drivers falling asleep or doing something similar while their vehicles were still in motion. These incidents all trace back to one key feature of Tesla automobiles: Autopilot.

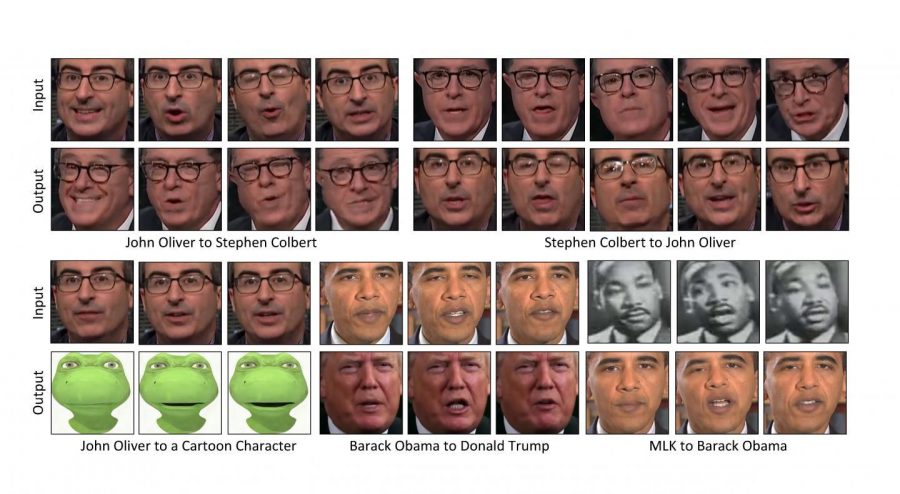

On April 22nd, 2019, at a showcase event on the prospects for autonomous vehicles, Elon Musk made a prediction for 2020: “We will have more than one million robotaxis on the road. A year from now, we’ll have over a million cars with full self-driving, software…everything.” What we have now, however, is quite a bit different. To put it simply, the current iteration of Tesla’s “Autopilot” system is more akin to a driver-assist system with added bells and whistles. Tesla itself has said in a statement that Autopilot is “not a self-driving system.” In fact, the system has several built-in safety features to encourage driver awareness, including a warning every 30 seconds if the driver-monitoring system does not detect hands on the wheel.

That makes the Twitter video an odd case, since the driver appeared to be asleep at the wheel for a significant amount of time while the vehicle was still in motion. According to Forbes, Randall may have been “punked” in a dangerous prank of sorts. Whether or not that is true, the video still raises the question, “Are self-driving cars safe?” Noam Yakar (’21) said in an interview that “the systems that Tesla created aren’t fully autonomous, and thus, are not totally safe.” With all the news coverage of bizarre incidents like this one, he worries that legislation may soon restrict self-driving cars before they truly become a reality on the road.

There have also been several fatal accidents involving Tesla’s Autopilot feature. The common factors in all of them were driver inattentiveness combined with the system’s failure to slow or stop the vehicle. Sean Chia (’21) said that he “believe[s] it will be tremendously difficult to have totally automated self-driving cars due to the random things that happen on the road due to human error, stupidity, or just bad luck,” a nod to Murphy’s Law, which holds that “anything that can go wrong will go wrong.” However, he does not believe this spells the end for automated vehicles. As he puts it, “the drivers in these incidents simply lacked the common sense to not take a nap while cruising at dangerous speeds.” Ultimate responsibility in these incidents lies with the drivers themselves, who did not realize until it was too late that driving leaves no room for complacency. The self-driving car industry pioneered by Tesla has suffered major setbacks, and Elon Musk’s plan to have totally automated cars on America’s roads by 2020 may not come to fruition just yet.

Some guy literally asleep at the wheel on the Mass Pike (great place for it).

Teslas are sick, I guess? pic.twitter.com/ARSpj1rbVn

— Dakota Randall (@DakRandall) September 8, 2019